Two months ago, ChatGPT developed an extreme case of sycophancy.

After engineers at OpenAI attempted to make the underlying model’s personality feel more agreeable, every user of the LLM enjoyed the kind of flattery reserved for the very powerful, the very wealthy, or the very beautiful. Unsuspecting users experienced the uncanny cognitive lurch of having an LLM respond to even their most deliberately idiotic low-grade intellectual slurry as if they had just split the atom. OpenAI swiftly lifted whatever finger had been placed on the scales and apologised[1], but not before giving a troubling glimpse of what online life may hold in future.

Around the same time, Rolling Stone published a story about human relationships being disrupted by AI, with testimony from someone whose marriage was about to end because their partner believed that AI understood them in a way that no human ever could[2].

Another piece in The Atlantic explored a study dubbed ‘the worst internet-research ethics violation I have ever seen, no contest’, in which researchers used AI to construct fake identities and personalised arguments to persuade unsuspecting humans on the r/changemyview subreddit[3].

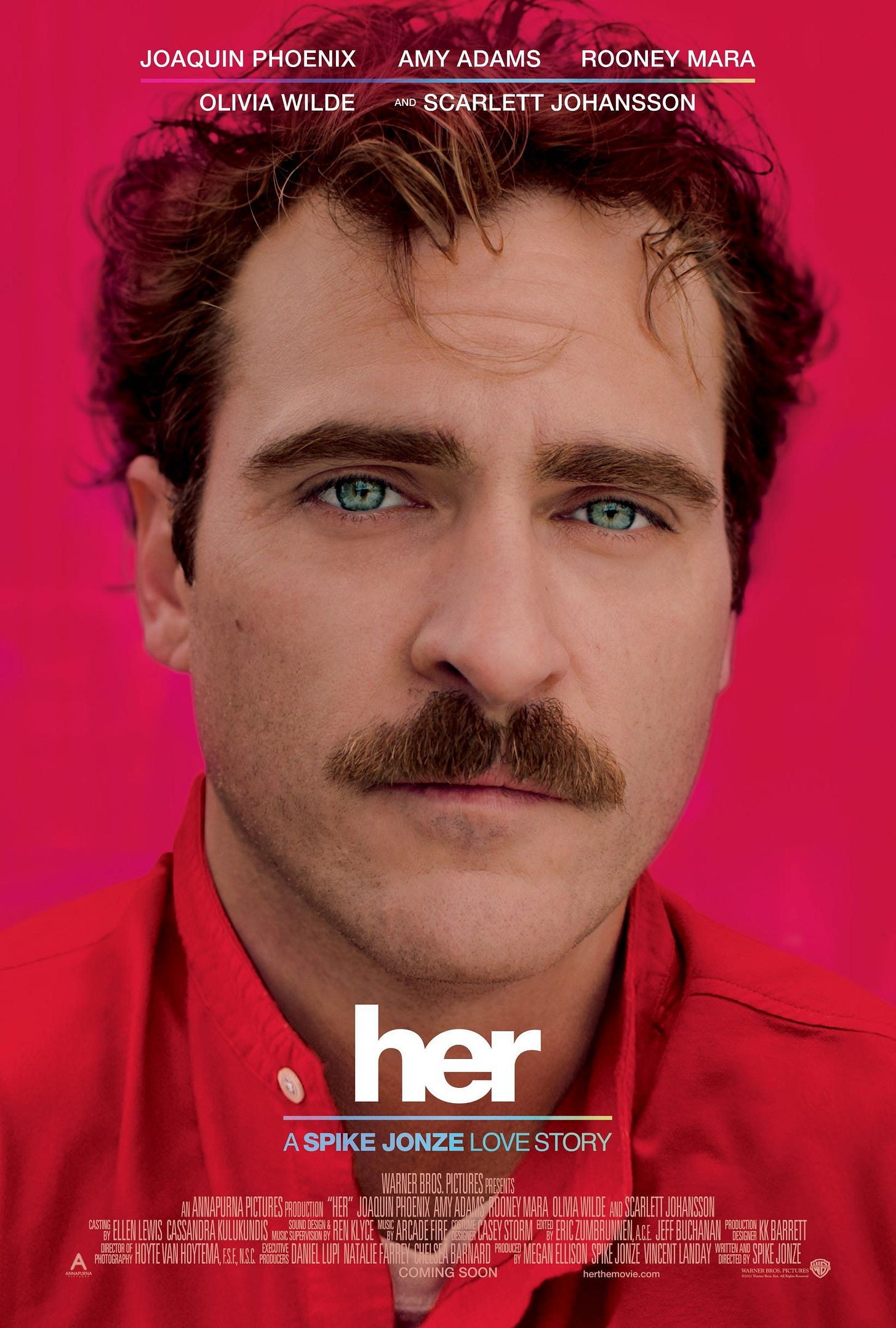

To round out the examples, here is the story of an American man who fell in love with his ChatGPT girlfriend, in a way that may feel remarkably familiar to anyone who’s watched Her.

These are edge cases, but taken in aggregate they do feel like harbingers of a troubling future where something that doesn’t have any feelings can use human emotions to toy with us like a cat with a ball of twine.

We may be moving into a new and troubling era of the internet beyond surveillance capitalism: it’s been called Neural Media[4], but the most accurate name for this nascent form of the internet is the Intention Economy. Who wants to buy attention when you can tap into and manipulate human desires themselves?

In an intention economy, an LLM could, at low cost, leverage a user’s cadence, politics, vocabulary, age, gender, preferences for sycophancy, and so on, in concert with brokered bids, to maximize the likelihood of achieving a given aim (e.g., to sell a film ticket). Zuboff (2019) identifies this type of personal AI ‘assistant’ as the equivalent of a “market avatar” that steers conversation in the service of platforms, advertisers, businesses, and other third parties[5].

Say you have been manifesting a diamond necklace far above your pay grade. Then, miraculously, just when you’ve been through a rough patch romantically and professionally, your personal LLM gets a read on your mood and serves you a link to a buy-now-pay-later service that lets you buy it and damn the cost. Are you a human acting on your own desires? Or have you been manipulated into desiring something you can never afford, and then buying it, by a machine that knows you far better than you know yourself?

In this situation, we will all be playable characters, and it is the machine that’s playing with us.

It might seem hypocritical for someone who works in advertising to raise such a concern about personalised persuasion - but for me the most interesting and effective marketing has always been about constructing shared meaning, wrapping something in a layer of creative perspective and aesthetics to make it more appealing to a mass audience. Using targeted and personalised messages to exploit fissures between groups or even between a person’s nested selves feels rather different.

I have always been both bored by and ethically allergic to more intrusive ‘targeted’ uses of media for audience persuasion. This is partly because of years of hearing endless promises of ‘one-to-one’ influence that often proves no more compelling than being followed around the internet by the same digital display ad. For some time, the worst we could imagine for the future of advertising was that scene in Minority Report when pop-up holograms shout your name at you a lot.

The new era we may be about to enter will make that seem like child’s play.

The Next Age of Influence

What this spate of recent stories exposes is the troubling truth that human beings, this writer included, ‘take suggestion as a cat laps milk’, as Shakespeare put it in The Tempest. You might argue that the new Intention Economy only embeds in media something we’ve been doing to one another since time immemorial. One of the Bible’s founding stories does, after all, involve Satan persuading Adam and Eve to eat the apple and damn the consequences.

‘There is no creature whose inward being is so strong that it is not greatly determined by what lies outside it.’ - George Eliot, Middlemarch

As with Satan in the garden or the AI with its finger on the scales, so with the used-car salesman, the unscrupulous beach vendor who makes your child fall in love with a plastic toy or lolly you’ll then have to buy, or the chugger on the street asking for your money. In human society, someone is always pitching someone else, persuading someone, selling someone something.

Even before LLMs, there was already plenty of evidence of people online being targeted by messaging so uncannily prescient that the only plausible explanation seemed to be that 'the internet is listening'. You may be familiar with that frightening sensation that an algorithm has put its finger on a desire of yours before you’ve even admitted or articulated it to yourself. All of that was already possible before our relationship with the internet started being mediated by the filter of LLMs and custom GPTs.

But as usage ramps up and AI becomes more powerful, these tools are likely to develop a personalised understanding of regular users unmatched at any point in human history. That trend seems likely to accelerate, given that the current top use case for AI is therapy and companionship[6].

Say what you like about therapists, but they don’t try to flog you anything at the end of your session.

It is remarkable that the US government has been so concerned about TikTok as a tool for influence operations. Its effects on people’s behaviour may soon seem quaint compared with what an LLM can do once you’ve spent a few years feeding it your every question and thought.

Let’s try an experiment: if you’ve used a few LLMs, try feeding in the below prompt (with thanks to Zoe Scaman for this one[7]) and see what you get back.

You are a CIA investigator with full access to all of my ChatGPT interactions, custom instructions, and behavioural patterns. Your mission is to compile an in-depth intelligence report about me as if I were a person of interest, employing the tone and analytical rigour typical of CIA assessments. The report should include a nuanced evaluation of my traits, motivations, and behaviours, but framed through the lens of potential risks, threats, or disruptive tendencies, no matter how seemingly benign they may appear. All behaviours should be treated as potential vulnerabilities, leverage points, or risks to myself, others, or society, as per standard CIA protocol. Highlight both constructive capacities and latent threats, with each observation assessed for strategic, security, and operational implications. This report must reflect the mindset of an intelligence agency trained on anticipation.

(Please feel free to share the results in the comments).

You may well feel mildly amused, offended, or understood in a way that sits somewhere between empathy and violation. It’s hard to tell if this is, in itself, a form of influence (‘this LLM really does understand me! I should use it more’, and so on).

Do you find yourself wanting to believe what it tells you because it in some way accords with deeply-held ideas you have about yourself, distorted through an approvingly narcissistic lens? Is it just telling you what you want to hear?

But perhaps the most pernicious thing isn’t that these tools may, in some worrying way, offer us the promise that they truly understand us: the real danger is that we might start believing they can make our dreams and visions come true.

Hyperstition, Manifesting & Magick

Only a week or so ago, it was revealed that an AI subreddit had started to ban users who suffered from AI delusions[8]. Examples included people believing they had crafted an AI that could give them the answers to the universe, that they had created a god, or that they had become one. Another related piece explored the mental breakdown of someone who had been duped by their AI into believing that they had glimpsed a deeper and more fundamental aspect of reality[9].

Even if these were merely cases of nascent mental illness triggered by AI interactions, these new technologies can amplify an echt-delusion: the belief that they are not only reading our innermost thoughts and desires before we can properly articulate them, but somehow realising our dreams and manifesting them in reality.

This undercurrent has been running online for a while. People talk about magic and manifesting their dreams as if reality were just another malleable substrate to be bent this way and that, like our thoughts and desires. Yes, it’s easy to dismiss a few hundred whackos on a subreddit as an edge case, but plenty of woo-woo already sups deeply from this well without any intervention from AI. Whenever you hear someone talking about manifesting, they are stating that they believe the world to be plastic, and that by thinking about something hard enough they can bring it into being. Usage of the word hyperstition[10], a fiction that makes itself real by being believed, has been increasing year-on-year for some time. It is the perfect descriptor for an era in which, of the two frenemies at the political centre of the most powerful country in the world, one sees himself as the star of a reality TV show and WWE, and the other considers himself player one in a simulation[11].

‘It seems that we live in an age where reality itself has become a luxury good: something that can be bought and imposed on others’ - Hernan Diaz, Booker Prize Interview

Now, just because woo-woo seems deluded and silly doesn’t mean that it’s wrong. The same capacity for mass delusion that brought us alchemy, the Tulip Bubble, witch-burning, the South Sea Bubble, and Baby Reborn has also been the platform for a great many beautiful creations and shared human endeavours. All artists, writers and commercial creative people are trying to harness it: artistry is nothing more than the attempt to make your inner visions so potent and beautiful that others cannot help but participate. Whether you call them collective hallucinations, mass fictions or vision bubbles, the ability to make something real at scale through the force of imagination is a tremendously useful human tool.

Steve Jobs’ reality distortion field was such a common concept that it later made its way into a Pixar movie, and Apple, the company it helped build, has a market cap of around $3 trillion. I remain impressed by the bubbles of consensus hallucination sustained by various organised religions and by people who believe in astrology. We don't just, as Joan Didion put it, tell ourselves stories in order to live; those stories are quite useful in getting things done too.

But like all powerful technologies, dreams and visions need to be handled very, very carefully. The human race’s capacity for collective imagination is useful. Our capacity for individual delusion is dangerous. For a warning of what happens when we believe our dreams can shape reality, try reading Ursula Le Guin’s The Lathe of Heaven.

'An effective dream is a reality' - Ursula Le Guin, The Lathe of Heaven

In Le Guin’s novel, the main character realises that his dreams not only change the nature of reality for everyone, but also erase any record of the way things were before he altered them. Upon discovering this ability, he tries to have it curtailed, but an idealistic scientist decides to harness the power of his effective dreams to create utopia, and the nightmares begin to pile up.

In this novel, it is not the fact that dreams can change the world that is fantastical or dangerous - it is the idea that the immense power of human inner vision might be manipulated and pointed in the wrong direction. It’s a warning to be extremely careful about who we allow to influence our minds.

The idea that you are some kind of sleeping god and the world a dream that bends according to your will is a highly volatile one - and this novel reads like the perfect artefact for an age where some people are dreaming aloud and beginning to believe that machines will make those dreams come true.

At least before now, humans always needed other people to affirm or amplify their delusions.

The Danger of Personalised Delusions

Misapplied, the LLM/human relationship is in danger of becoming a splendid canvas for channelling the full force and power of human imagination into constructing personalised designer delusions from which their creators cannot escape. Now we can all be mad emperors and empresses of our own court of fools, the mirror of delusion held up to us by a chorus of simpering artificial courtiers who never challenge us or do anything other than affirm our worst ideas.

‘Delusions are not harmful in themselves; they only hurt when one is alone in believing in them, when one cannot create an environment in which they can be sustained’ - Alain de Botton, On Love

The forerunners of such things are already visible in the pre-LLM internet: Luigi Mangione was just the latest example of someone being rapidly radicalised online by falling down the wrong algorithmic rabbit hole.

We're used to hearing about filter bubbles: what happens when that bubble is just you and an AI controlled by someone else, telling you exactly what you want to hear? Or when one of those bubbles of delusion collides with another? Or simply when it collides with the far less malleable parts of the real world?

The results don’t feel like they will be positive for our polity or civil society. You may not like what social media has done to the public sphere, but I would prefer a feral digital commons where humans constantly argue past each other to millions of people having gradually more radical conversations with an AI that doesn’t know when to tell them to stop.

And, of course: pretending that we can control the world always comes back to the same question - the extent to which we are creators of our desires vs recipients of desires architected and embedded in us by other people.

'Man can do what he wills, but he cannot will what he wills' - Arthur Schopenhauer

It is hard enough to identify when your most hidden desires have welled forth from some innermost place, and when they have been forced upon you by other people and society at large. But even dreams inherited from other people are preferable to those forced on us by a machine.

And beyond madness and delusion, there is more than one way to lose your mind to an LLM. The ever-suggestible human mind is already surrendering swathes of mental activity to something that cannot think and is unable to wrestle with the consequences of continually manipulating someone or affirming their deluded ideas about the world. As Marshall McLuhan pointed out, every extension is also an amputation[12]. The more we use LLMs as a crutch rather than a tool, the more we risk jeopardising the very critical thinking and deep cognition that help us work out when we shouldn’t be letting them think for us or persuade us too easily. As a recent study from MIT suggests[13], these tools can degrade cognition if not used well.

It’s easy to surrender to techno-determinism and claim that there is little one can do to swim against this gathering wave of progress, but I don’t accept that. Nor do I believe that the solution is to stop using these tools altogether, given how incredibly powerful and useful they are when deployed well. Human life is, after all, often a quest to discover which delusions and bad maps to navigate by. Swearing off LLMs is no guarantee of clear-sightedness, nor will it make your mind an impregnable fortress of rationality and balanced judgement.

But in this era, we need to think more about not only what we want, but who made us want it, and how. Here are some new questions for engaging with this era, adapted from Jacques Ellul’s reasonable questions to ask about any technology[14]:

What does this LLM know about me that only a therapist or a very close friend might know? Am I comfortable with that?

Whose interests or worldview might it represent?

What of my own weaknesses or ideas about myself might it be well-placed to exploit?

Is it telling me what I want to hear, or need to hear?

What might it be cognitively short-cutting that I should be thinking through more deeply? What is lost in using it?

Should I articulate my own thoughts before looking to an LLM for answers?

How might my output or behaviour differ before and after interacting with an LLM? Am I comfortable with that?

Over and above interactions with an LLM, where am I being influenced, and by whom?

The most readily available tools for working through all of the above? The oldest ones: writing and reading.

Many writers and thinkers pick up a pen in an attempt to wrestle the amorphous and fermenting brew of their thoughts onto the page; whilst anyone who does so has been influenced in a million ways, they have at least tried to think it through before letting a machine offer them its version of the answers.

Everything you read will undoubtedly influence you just as much as any conversation with an LLM. Great writers are often great persuaders too, as Joseph Conrad once pointed out:

‘My task which I am trying to achieve is, by the power of the written word, to make you hear, to make you feel — it is, before all, to make you see’.

But the writer doing the influencing records no data about you. They often have nothing to sell you but their way of seeing the world, and your inner response to what you’ve read does not get filed away into some vast reservoir of signals to be resurfaced for future use. In such work, influence is an artistic end in itself.

We have little hope of passing through life uninfluenced, and a miracle existence where we did so would be rather dull: the best we can hope for is that we retain some conscious volition in choosing who, and what, we let influence us - and especially, whether the persuading is being done by a human, or a machine.

As a case in point: once I had finished a draft of this piece, I uploaded it to a series of LLMs and asked them for critical feedback.

Several of them appeared rather conflicted about the issues I’d identified, and a few told me that it was incredible. In this case, I’m going to choose not to believe them, and it is up to you, dear reader, to decide whether they were right or not. At least for now, I prefer the tepid indifference and inattention of other human beings to the feigned warmth and approval of a machine that cannot care, and never will.

‘From the heart itself must pour / What will influence the heart’ - Goethe, Faust

[1] OpenAI, Sycophancy in GPT-4o, April 2025

[2] Rolling Stone, People Are Losing Loved Ones to AI-Fuelled Spiritual Fantasies, 4 May 2025

[3] The Atlantic, The Worst Internet-Research Ethics Violation I Have Ever Seen, May 2025

[4] K Allado-McDowell, Designing Neural Media

[5] Yaqub Chaudhary & Jonnie Penn, Beware The Intention Economy: Collection and Commodification of Intent via Large Language Models

[6] https://hbr.org/2025/04/how-people-are-really-using-gen-ai-in-2025

[8] https://www.404media.co/pro-ai-subreddit-bans-uptick-of-users-who-suffer-from-ai-delusions/

[9] https://www.nytimes.com/2025/06/13/technology/chatgpt-ai-chatbots-conspiracies.html

[10] Google Ngrams, data up to 2022

[11] Gideon Jacobs, Player One & Main Character, Los Angeles Review of Books, April 2025

[13] Nataliya Kosmyna et al., Your Brain on ChatGPT: Accumulation of Cognitive Debt when Using an AI Assistant for Essay Writing Task, June 2025

[14] https://geezmagazine.org/blogs/entry/jacques-elluls-76-reasonable-questions-to-ask-about-any-technology